CPPN AI art project

late 2018

This was an abstract AI art project that I worked on during a brief gap after a startup was acquired but before we started the new company.

This was a few years before AI art really took off, and advanced prompt-based AI art models were widely available.

About CPPNs

A compositional pattern producing network

(CPPN)

is a neural network formulated as an

The term CPPN originates from a 2007 paper which uses them with genetic algorithms to "breed" networks to produce complex images.

The use of CPPNs for art is related to a technique that dates

to at least a

1991 paper by Karl Sims

which uses genetic algorithms to breed

We do not use genetic algorithms in this project, so I won't go into these details. Instead, we will use gradient-based optimization (i.e. ordinary neural net training) to produce images.

Training a CPPN

To train a CPPN, we evaluate the network over a grid of

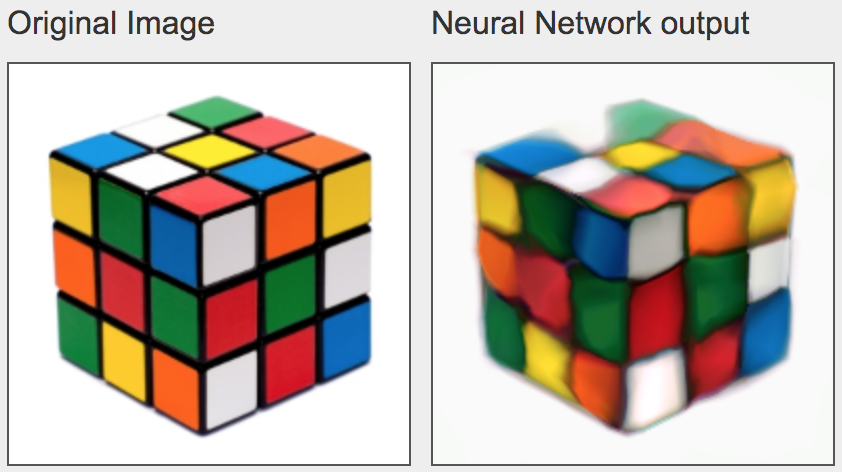

For example, Andrej Karpathy has a great in-browser demo of fitting a CPPN to a target image to "draw" that image.

ConvNetJS

CPPN demo

drawing a rubik's cube.

ConvNetJS

CPPN demo

drawing a rubik's cube.

The CPPN formulation has a few nice properties. First, optimizing this kind of network produces an artistic effect similar to drawing or painting, with interesting distortions. Second, there is no inherent resolution to a CPPN, allowing us to perform final inference to render an image at a higher resolution than we use at training time. This allows rendering higher resolution art than was practical at the time.

Making "intersting" images

The challenge is to choose an objective to fit a CPPN such that the model creates something interesting.

For this project, I used a pretrained ImageNet model (Inception v3) as a "critic" to judge whether images are interesting. I choose a specific feature -- a channel from a layer within the pretrained network -- as the target, and optimize the CPPN to excite that feature. This objective is similar to that used in Differentiable Image Parameterizations by Mordvintsev et al.

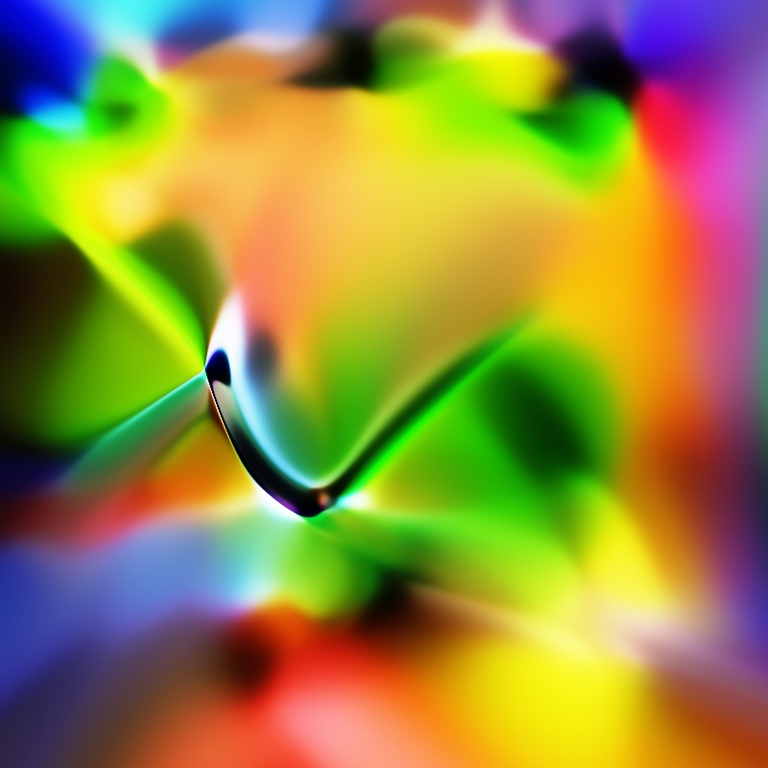

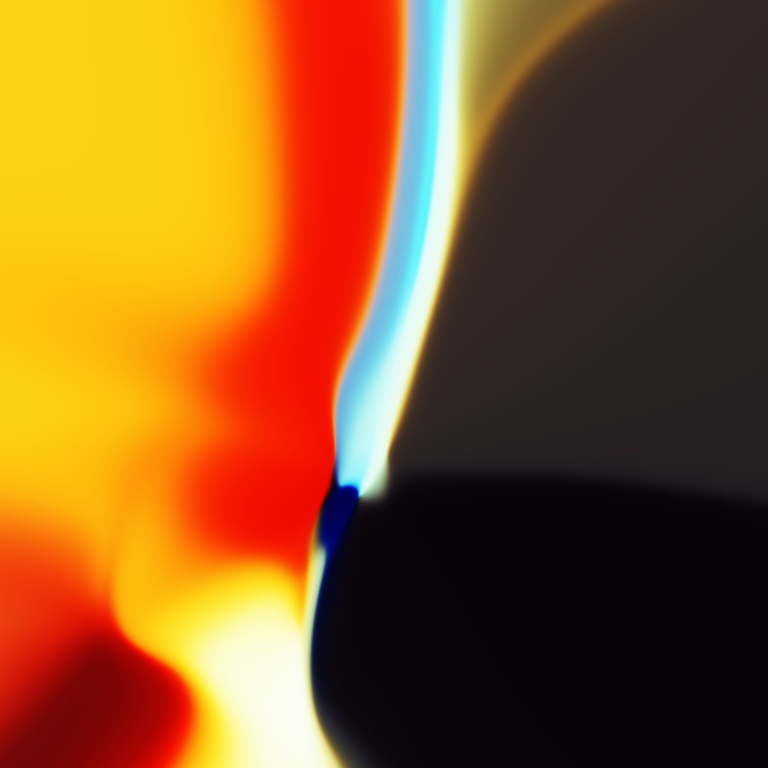

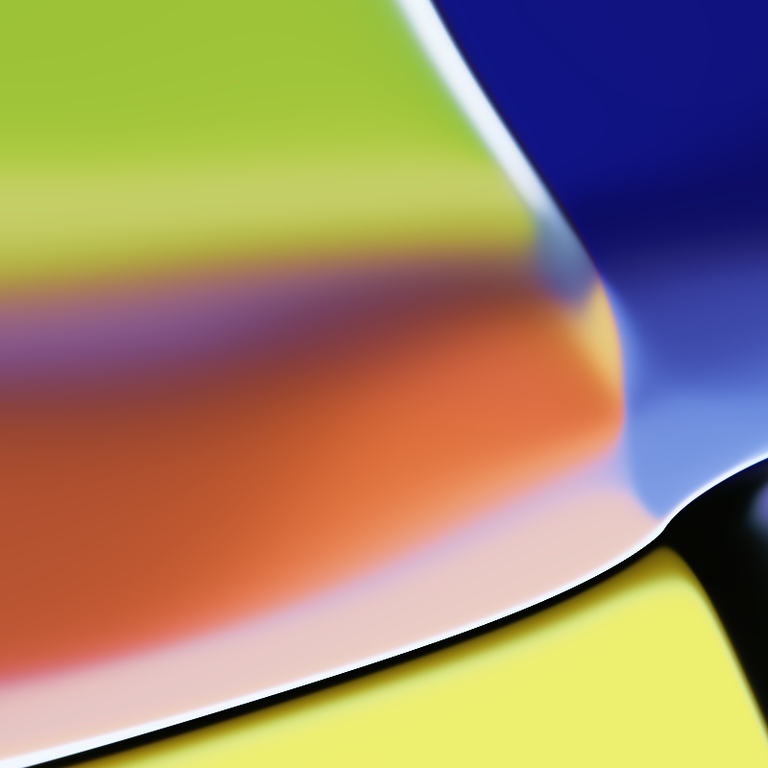

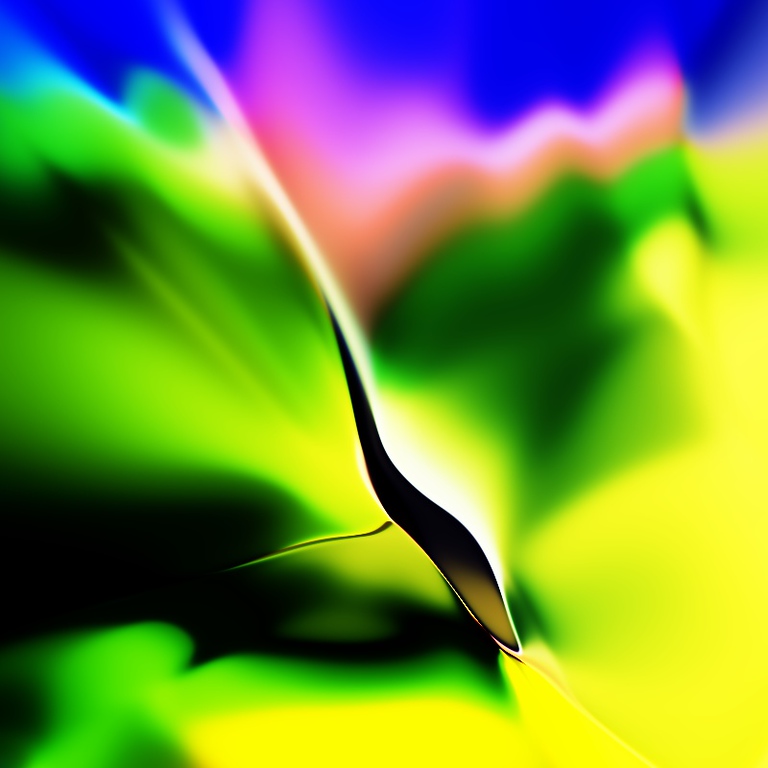

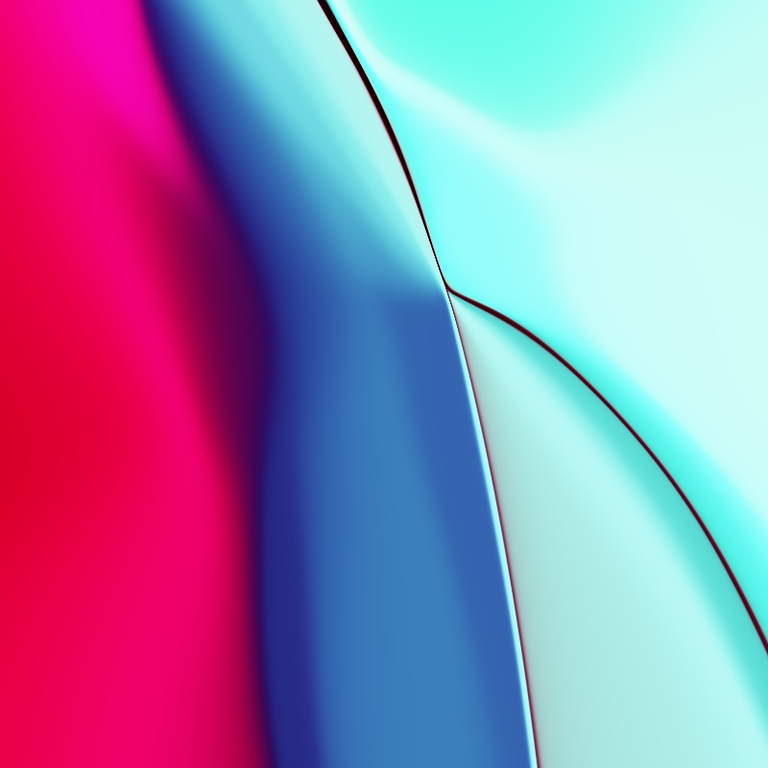

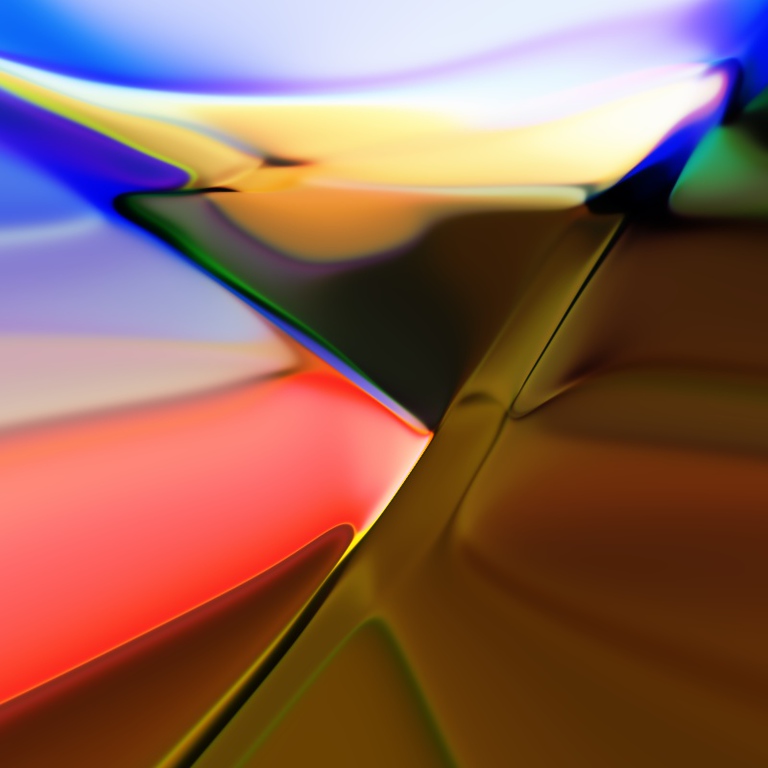

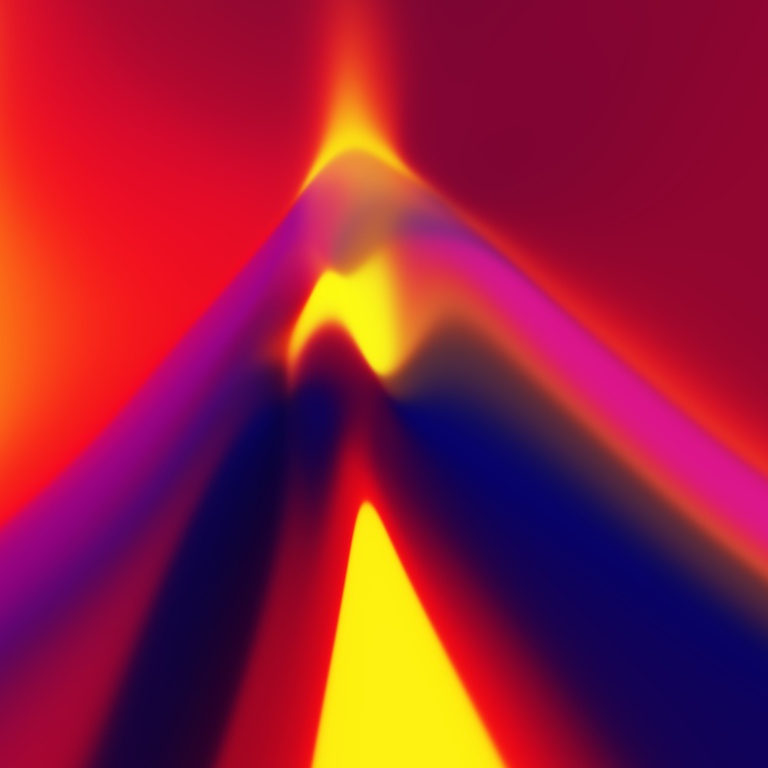

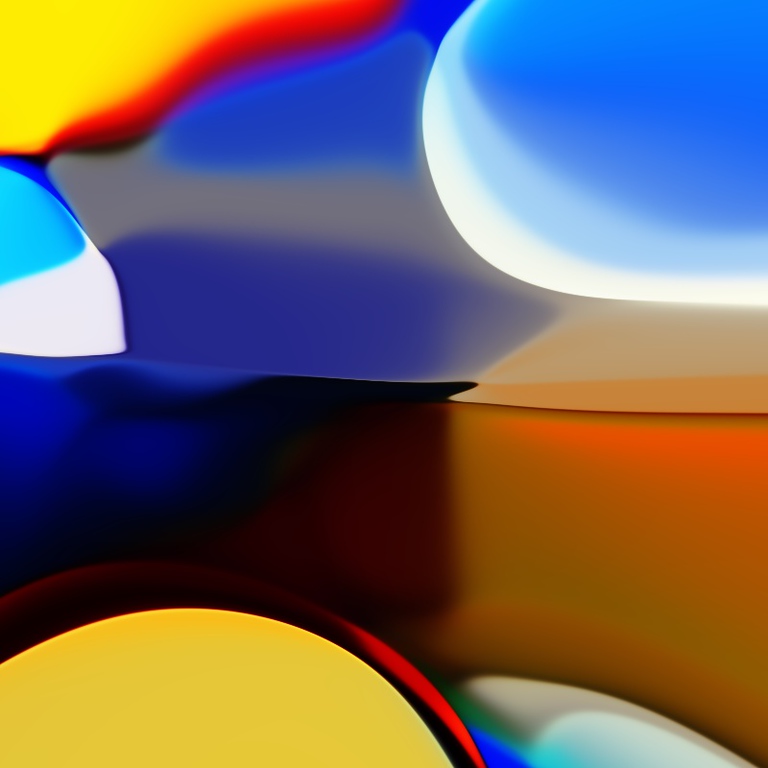

Once the CPPN model converges, it can be used to render a larger high-resolution image using the CPPN model. The process produces abstract images that don't quite look like anything else:

An example CPPN image from this project.

An example CPPN image from this project.

Each image is the result of a single CPPN training run, training the CPPN network from initialization. I'd generate one image each from a range of features within a layer, and take note of features that produce interesting results. I'd then generate several images from those features.

Observations

Different model architecture choices (eg. activation type and depth), and perhaps even the choice of optimizer, can produce different artistic effects. I preferred images generated by tanh networks to those generated by ReLU networks, for instance. Different layers of the target network also have different aesthetic qualities.

Training multiple networks on the same feature from the target model produces images that have a similar texture, as if producing a series of images for a collection.

I worked on this several years ago now, so I'm working from memory, and I no longer have ablative examples for the various design choices I settled on.

Gallery

Related links

- Differentiable Image Parameterizations (Mordvintsev et al, Google AI)

- Generative Art with CPPN-GANs (Kevin Jiang)

- David Ha has several posts on CPPN art: Neurogram, Generating Large Images from Latent Vectors, and a gallery of images from CPPN projects.